27.01.2026

Carina Newen reveals how optical illusions help explain and improve artificial intelligence.

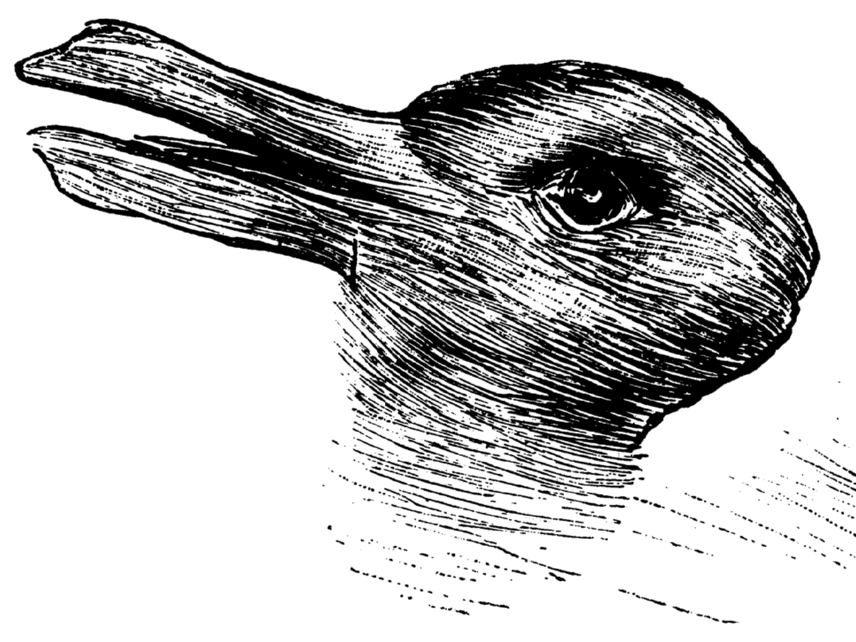

Photo: duck-rabbit

Optical illusions have fascinated people for centuries. They surprise us, confuse us – and challenge how we see the world. This moment of uncertainty is exactly where the new research paper by Dr. Carina Newen begins.

In her recently released arXiv paper, Newen shows that artificial intelligence struggles with ambiguous images in ways that are surprisingly similar to humans. The paper introduces a new dataset of carefully designed optical illusions. Each image merges two animals into a single figure, and the two share visual features so closely that it is hard to tell which one is depicted. Often the decisive clue is something subtle, such as the direction in which the animal appears to be looking.

This is where the research becomes especially relevant for Explainable AI (XAI). Many existing XAI methods try to explain AI decisions by highlighting important pixels or regions in an image. Newen’s work demonstrates that this approach often fails in ambiguous cases. When two classes share the same visual features, the highlighted regions look much the same for either interpretation, so pixel-based explanations do not help people understand why a model chose one interpretation over the other.

The new dataset makes this limitation visible and testable. It allows researchers to evaluate not only how well AI models perform on difficult, ambiguous data, but also whether their explanations are meaningful to humans. A key finding is that adding concept-level information – such as eye position or gaze direction – can significantly improve both accuracy and interpretability.

This research reflects the broader approach of the RC Trust, where technical AI research is combined with human-centered perspectives. By bringing together computer science, perception research, and questions of human understanding, the work shows how trustworthy AI depends on more than performance alone – it also depends on interpretability and alignment with human reasoning.

The dataset is openly available and can already be used by researchers, students, and anyone interested in explainable AI. It offers a concrete example of why transparency in AI is both a technical and a societal issue.

Carina Newen was the first doctoral researcher of the RC Trust and successfully defended her PhD in winter 2025. This paper marks both a scientific contribution and an important milestone for the research center.
